publications

automatically generated publication list from NASA's ADS service, powered by jekyll-scholar.

2025

- The cosmological analysis of X-ray cluster surveys: VI. Inference based on analytically simulated observable diagrams. M. Kosiba, N. Cerardi, M. Pierre, and 4 more authors. Astronomy and Astrophysics, Jan 2025

Context. The number density of galaxy clusters across mass and redshift has been established as a powerful cosmological probe, yielding important information on the matter components of the Universe. Cosmological analyses with galaxy clusters traditionally employ scaling relations, which are empirical relationships between cluster masses and their observable properties. However, many challenges arise from this approach as the scaling relations are highly scattered, may be ill-calibrated, depend on the cosmology, and contain many nuisance parameters with low physical significance. Aims. For this paper, we used a simulation-based inference method utilizing artificial neural networks to optimally extract cosmological information from a shallow X-ray survey, solely using count rates, hardness ratios, and redshifts. This procedure enabled us to conduct likelihood-free inference of cosmological parameters Ω_m and σ_8. Methods. To achieve this, we analytically generated several datasets of 70 000 cluster samples with totally random combinations of cosmological and scaling relation parameters. Each sample in our simulation is represented by its galaxy cluster distribution in a count rate (CR) and hardness ratio (HR) space in multiple redshift bins. We trained convolutional neural networks (CNNs) to retrieve the cosmological parameters from these distributions. We then used neural density estimation (NDE) neural networks to predict the posterior probability distribution of Ω_m and σ_8 given an input galaxy cluster sample. Results. Using the survey area as a proxy for the number of clusters detected for fixed cosmological and astrophysical parameters, and hence of the Poissonian noise, we analyze various survey sizes. The 1σ errors of our density estimator on one of the target testing simulations are, for 1000 deg^2, 15.2% for Ω_m and 10.0% for σ_8; and, for 10 000 deg^2, 9.6% for Ω_m and 5.6% for σ_8. We also compare our results with a traditional Fisher analysis and explore the effect of an additional constraint on the redshift distribution of the simulated samples. Conclusions. We demonstrate, as a proof of concept, that it is possible to calculate cosmological predictions of Ω_m and σ_8 from a galaxy cluster population without explicitly computing cluster masses and even the scaling relation coefficients, thus avoiding potential biases resulting from such a procedure.

@article{2025A&A...693A..46K, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2025A&A...693A..46K}, archiveprefix = {arXiv}, author = {{Kosiba}, M. and {Cerardi}, N. and {Pierre}, M. and {Lanusse}, F. and {Garrel}, C. and {Werner}, N. and {Shalak}, M.}, doi = {10.1051/0004-6361/202450499}, eid = {A46}, eprint = {2409.06001}, journal = {Astronomy and Astrophysics}, keywords = {galaxies: clusters: general, cosmological parameters, cosmology: observations, Astrophysics - Cosmology and Nongalactic Astrophysics}, month = jan, pages = {A46}, primaryclass = {astro-ph.CO}, title = {{The cosmological analysis of X-ray cluster surveys: VI. Inference based on analytically simulated observable diagrams}}, volume = {693}, year = {2025} }

2024

- The Multimodal Universe: Enabling Large-Scale Machine Learning with 100TB of Astronomical Scientific Data. The Multimodal Universe Collaboration, Jeroen Audenaert, Micah Bowles, and 26 more authors. arXiv e-prints, Dec 2024

We present the MULTIMODAL UNIVERSE, a large-scale multimodal dataset of scientific astronomical data, compiled specifically to facilitate machine learning research. Overall, the MULTIMODAL UNIVERSE contains hundreds of millions of astronomical observations, constituting 100 TB of multi-channel and hyper-spectral images, spectra, multivariate time series, as well as a wide variety of associated scientific measurements and “metadata”. In addition, we include a range of benchmark tasks representative of standard practices for machine learning methods in astrophysics. This massive dataset will enable the development of large multi-modal models specifically targeted towards scientific applications. All codes used to compile the MULTIMODAL UNIVERSE and a description of how to access the data are available at https://github.com/MultimodalUniverse/MultimodalUniverse

@article{2024arXiv241202527T, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2024arXiv241202527T}, archiveprefix = {arXiv}, author = {{The Multimodal Universe Collaboration} and {Audenaert}, Jeroen and {Bowles}, Micah and {Boyd}, Benjamin M. and {Chemaly}, David and {Cherinka}, Brian and {Ciuc{\u{a}}}, Ioana and {Cranmer}, Miles and {Do}, Aaron and {Grayling}, Matthew and {Hayes}, Erin E. and {Hehir}, Tom and {Ho}, Shirley and {Huertas-Company}, Marc and {Iyer}, Kartheik G. and {Jablonska}, Maja and {Lanusse}, Francois and {Leung}, Henry W. and {Mandel}, Kaisey and {Mart{\'\i}nez-Galarza}, Juan Rafael and {Melchior}, Peter and {Meyer}, Lucas and {Parker}, Liam H. and {Qu}, Helen and {Shen}, Jeff and {Smith}, Michael J. and {Stone}, Connor and {Walmsley}, Mike and {Wu}, John F.}, doi = {10.48550/arXiv.2412.02527}, eid = {arXiv:2412.02527}, eprint = {2412.02527}, journal = {arXiv e-prints}, keywords = {Astrophysics - Instrumentation and Methods for Astrophysics, Astrophysics - Astrophysics of Galaxies, Astrophysics - Solar and Stellar Astrophysics}, month = dec, pages = {arXiv:2412.02527}, primaryclass = {astro-ph.IM}, title = {{The Multimodal Universe: Enabling Large-Scale Machine Learning with 100TB of Astronomical Scientific Data}}, year = {2024} }

- The Multimodal Universe: 100 TB of Machine Learning Ready Astronomical Data. Eirini Angeloudi, Jeroen Audenaert, Micah Bowles, and 26 more authors. Research Notes of the American Astronomical Society, Dec 2024

We present the Multimodal Universe, a new framework collating over 100 TB of multimodal astronomical data for its first release, spanning images, spectra, time series, tabular and hyper-spectral data. This unified collection enables a wide variety of machine learning (ML) applications and research across astronomical domains. The dataset brings together observations from multiple surveys, facilities, and wavelength regimes, providing standardized access to diverse data types. By providing uniform access to this diverse data, the Multimodal Universe aims to accelerate the development of ML methods for observational astronomy that can work across the large differences in astronomical datasets. The framework is actively supported and is designed to be extended whilst enforcing minimal self-consistent conventions, making contributing data as simple and practical as possible.

@article{2024RNAAS...8..301A, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2024RNAAS...8..301A}, author = {{Angeloudi}, Eirini and {Audenaert}, Jeroen and {Bowles}, Micah and {Boyd}, Benjamin M. and {Chemaly}, David and {Cherinka}, Brian and {Ciuc{\u{a}}}, Ioana and {Cranmer}, Miles and {Do}, Aaron and {Grayling}, Matthew and {Hayes}, Erin E. and {Hehir}, Tom and {Ho}, Shirley and {Huertas-Company}, Marc and {Iyer}, Kartheik G. and {Jablonska}, Maja and {Lanusse}, Francois and {Leung}, Henry W. and {Mandel}, Kaisey and {Mart{\'\i}nez-Galarza}, Juan Rafael and {Melchior}, Peter and {Meyer}, Lucas and {Parker}, Liam H. and {Qu}, Helen and {Shen}, Jeff and {Smith}, Michael J. and {Walmsley}, Mike and {Wu}, John F. and {Multimodal Universe Collaboration}}, doi = {10.3847/2515-5172/ad9a63}, eid = {301}, journal = {Research Notes of the American Astronomical Society}, keywords = {Astronomy data acquisition, Astronomy databases, 1860, 83}, month = dec, number = {12}, pages = {301}, title = {{The Multimodal Universe: 100 TB of Machine Learning Ready Astronomical Data}}, volume = {8}, year = {2024} }

- Geometric deep learning for galaxy-halo connection: a case study for galaxy intrinsic alignments. Yesukhei Jagvaral, Francois Lanusse, and Rachel Mandelbaum. arXiv e-prints, Sep 2024

Forthcoming cosmological imaging surveys, such as the Rubin Observatory LSST, require large-scale simulations encompassing realistic galaxy populations for a variety of scientific applications. Of particular concern is the phenomenon of intrinsic alignments (IA), whereby galaxies orient themselves towards overdensities, potentially introducing significant systematic biases in weak gravitational lensing analyses if they are not properly modeled. Due to computational constraints, simulating the intricate details of galaxy formation and evolution relevant to IA across vast volumes is impractical. As an alternative, we propose a Deep Generative Model trained on the IllustrisTNG-100 simulation to sample 3D galaxy shapes and orientations to accurately reproduce intrinsic alignments along with correlated scalar features. We model the cosmic web as a set of graphs, each graph representing a halo with nodes representing the subhalos/galaxies. The architecture consists of a SO(3) × ℝ^n diffusion generative model, for galaxy orientations and n scalars, implemented with E(3) equivariant Graph Neural Networks that explicitly respect the Euclidean symmetries of our Universe. The model is able to learn and predict features such as galaxy orientations that are statistically consistent with the reference simulation. Notably, our model demonstrates the ability to jointly model Euclidean-valued scalars (galaxy sizes, shapes, and colors) along with non-Euclidean valued SO(3) quantities (galaxy orientations) that are governed by highly complex galactic physics at non-linear scales.

@article{2024arXiv240918761J, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2024arXiv240918761J}, archiveprefix = {arXiv}, author = {{Jagvaral}, Yesukhei and {Lanusse}, Francois and {Mandelbaum}, Rachel}, doi = {10.48550/arXiv.2409.18761}, eid = {arXiv:2409.18761}, eprint = {2409.18761}, journal = {arXiv e-prints}, keywords = {Astrophysics - Astrophysics of Galaxies, Computer Science - Machine Learning}, month = sep, pages = {arXiv:2409.18761}, primaryclass = {astro-ph.GA}, title = {{Geometric deep learning for galaxy-halo connection: a case study for galaxy intrinsic alignments}}, year = {2024} }

- Simulation-Based Inference Benchmark for LSST Weak Lensing Cosmology. Justine Zeghal, Denise Lanzieri, François Lanusse, and 5 more authors. arXiv e-prints, Sep 2024

Standard cosmological analysis, which relies on two-point statistics, fails to extract the full information of the data. This limits our ability to constrain cosmological parameters with precision. Thus, recent years have seen a paradigm shift from analytical likelihood-based to simulation-based inference. However, such methods require a large number of costly simulations. We focus on full-field inference, considered the optimal form of inference. Our objective is to benchmark several ways of conducting full-field inference to gain insight into the number of simulations required for each method. We make a distinction between explicit and implicit full-field inference. Moreover, as it is crucial for explicit full-field inference to use a differentiable forward model, we aim to discuss the advantages of having this property for the implicit approach. We use the sbi_lens package, which provides a fast and differentiable log-normal forward model. This forward model enables us to compare explicit and implicit full-field inference with and without gradient. The former is achieved by sampling the forward model through the No-U-Turn Sampler. The latter starts by compressing the data into sufficient statistics and uses the Neural Likelihood Estimation algorithm and the one augmented with gradient. We perform a full-field analysis on LSST Y10-like weak lensing simulated mass maps. We show that explicit and implicit full-field inference yield consistent constraints. Explicit inference requires 630 000 simulations with our particular sampler, corresponding to 400 independent samples. Implicit inference requires a maximum of 101 000 simulations, split into 100 000 simulations to build sufficient statistics (this number is not fine-tuned) and 1 000 simulations to perform inference. Additionally, we show that our way of exploiting the gradients does not significantly help implicit inference.

@article{2024arXiv240917975Z, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2024arXiv240917975Z}, archiveprefix = {arXiv}, author = {{Zeghal}, Justine and {Lanzieri}, Denise and {Lanusse}, Fran{\c{c}}ois and {Boucaud}, Alexandre and {Louppe}, Gilles and {Aubourg}, Eric and {Bayer}, Adrian E. and {The LSST Dark Energy Science Collaboration}}, doi = {10.48550/arXiv.2409.17975}, eid = {arXiv:2409.17975}, eprint = {2409.17975}, journal = {arXiv e-prints}, keywords = {Astrophysics - Cosmology and Nongalactic Astrophysics, Astrophysics - Instrumentation and Methods for Astrophysics}, month = sep, pages = {arXiv:2409.17975}, primaryclass = {astro-ph.CO}, title = {{Simulation-Based Inference Benchmark for LSST Weak Lensing Cosmology}}, year = {2024} }

- Teaching dark matter simulations to speak the halo language. Shivam Pandey, Francois Lanusse, Chirag Modi, and 1 more author. arXiv e-prints, Sep 2024

We develop a transformer-based conditional generative model for discrete point objects and their properties. We use it to build a model for populating cosmological simulations with gravitationally collapsed structures called dark matter halos. Specifically, we condition our model with dark matter distribution obtained from fast, approximate simulations to recover the correct three-dimensional positions and masses of individual halos. This leads to a first model that can recover the statistical properties of the halos at small scales to better than 3% level using an accelerated dark matter simulation. This trained model can then be applied to simulations with significantly larger volumes which would otherwise be computationally prohibitive with traditional simulations, and also provides a crucial missing link in making end-to-end differentiable cosmological simulations. The code, named GOTHAM (Generative cOnditional Transformer for Halo’s Auto-regressive Modeling) is publicly available at https://github.com/shivampcosmo/GOTHAM.

@article{2024arXiv240911401P, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2024arXiv240911401P}, archiveprefix = {arXiv}, author = {{Pandey}, Shivam and {Lanusse}, Francois and {Modi}, Chirag and {Wandelt}, Benjamin D.}, doi = {10.48550/arXiv.2409.11401}, eid = {arXiv:2409.11401}, eprint = {2409.11401}, journal = {arXiv e-prints}, keywords = {Astrophysics - Cosmology and Nongalactic Astrophysics, Astrophysics - Instrumentation and Methods for Astrophysics}, month = sep, pages = {arXiv:2409.11401}, primaryclass = {astro-ph.CO}, title = {{Teaching dark matter simulations to speak the halo language}}, year = {2024} }

- Optimal Neural Summarisation for Full-Field Weak Lensing Cosmological Implicit Inference. Denise Lanzieri, Justine Zeghal, T. Lucas Makinen, and 3 more authors. arXiv e-prints, Jul 2024

Traditionally, weak lensing cosmological surveys have been analyzed using summary statistics motivated by their analytically tractable likelihoods, or by their ability to access higher-order information, at the cost of requiring Simulation-Based Inference (SBI) approaches. While informative, these statistics are neither designed nor guaranteed to be statistically sufficient. With the rise of deep learning, it becomes possible to create summary statistics optimized to extract the full data information. We compare different neural summarization strategies proposed in the weak lensing literature, to assess which loss functions lead to theoretically optimal summary statistics to perform full-field inference. In doing so, we aim to provide guidelines and insights to the community to help guide future neural-based inference analyses. We design an experimental setup to isolate the impact of the loss function used to train neural networks. We have developed the sbi_lens JAX package, which implements an automatically differentiable lognormal wCDM LSST-Y10 weak lensing simulator. The explicit full-field posterior obtained using the Hamiltonian Monte Carlo sampler gives us a ground truth to which to compare different compression strategies. We provide theoretical insight into the loss functions used in the literature and show that some do not necessarily lead to sufficient statistics (e.g. Mean Square Error (MSE)), while those motivated by information theory (e.g. Variational Mutual Information Maximization (VMIM)) can. Our numerical experiments confirm these insights and show, in our simulated wCDM scenario, that the Figure of Merit (FoM) of an analysis using neural summaries optimized under VMIM achieves 100% of the reference Ω_c-σ_8 full-field FoM, while an analysis using neural summaries trained under MSE achieves only 81% of the same reference FoM.

@article{2024arXiv240710877L, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2024arXiv240710877L}, archiveprefix = {arXiv}, author = {{Lanzieri}, Denise and {Zeghal}, Justine and {Makinen}, T. Lucas and {Boucaud}, Alexandre and {Starck}, Jean-Luc and {Lanusse}, Fran{\c{c}}ois}, doi = {10.48550/arXiv.2407.10877}, eid = {arXiv:2407.10877}, eprint = {2407.10877}, journal = {arXiv e-prints}, keywords = {Astrophysics - Cosmology and Nongalactic Astrophysics}, month = jul, pages = {arXiv:2407.10877}, primaryclass = {astro-ph.CO}, title = {{Optimal Neural Summarisation for Full-Field Weak Lensing Cosmological Implicit Inference}}, year = {2024} }

- AstroCLIP: a cross-modal foundation model for galaxies. Liam Parker, Francois Lanusse, Siavash Golkar, and 13 more authors. Monthly Notices of the Royal Astronomical Society, Jul 2024

We present AstroCLIP, a single, versatile model that can embed both galaxy images and spectra into a shared, physically meaningful latent space. These embeddings can then be used - without any model fine-tuning - for a variety of downstream tasks including (1) accurate in-modality and cross-modality semantic similarity search, (2) photometric redshift estimation, (3) galaxy property estimation from both images and spectra, and (4) morphology classification. Our approach to implementing AstroCLIP consists of two parts. First, we embed galaxy images and spectra separately by pre-training separate transformer-based image and spectrum encoders in self-supervised settings. We then align the encoders using a contrastive loss. We apply our method to spectra from the Dark Energy Spectroscopic Instrument and images from its corresponding Legacy Imaging Survey. Overall, we find remarkable performance on all downstream tasks, even relative to supervised baselines. For example, for a task like photometric redshift prediction, we find similar performance to a specifically trained ResNet18, and for additional tasks like physical property estimation (stellar mass, age, metallicity, and specific star formation rate), we beat this supervised baseline by 19 per cent in terms of R^2. We also compare our results with a state-of-the-art self-supervised single-modal model for galaxy images, and find that our approach outperforms this benchmark by roughly a factor of two on photometric redshift estimation and physical property prediction in terms of R^2, while remaining roughly in line in terms of morphology classification. Ultimately, our approach represents the first cross-modal self-supervised model for galaxies, and the first self-supervised transformer-based architectures for galaxy images and spectra.

@article{2024MNRAS.531.4990P, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2024MNRAS.531.4990P}, archiveprefix = {arXiv}, author = {{Parker}, Liam and {Lanusse}, Francois and {Golkar}, Siavash and {Sarra}, Leopoldo and {Cranmer}, Miles and {Bietti}, Alberto and {Eickenberg}, Michael and {Krawezik}, Geraud and {McCabe}, Michael and {Morel}, Rudy and {Ohana}, Ruben and {Pettee}, Mariel and {R{\'e}galdo-Saint Blancard}, Bruno and {Cho}, Kyunghyun and {Ho}, Shirley and {Polymathic AI Collaboration}}, doi = {10.1093/mnras/stae1450}, eprint = {2310.03024}, journal = {Monthly Notices of the Royal Astronomical Society}, keywords = {Astrophysics - Instrumentation and Methods for Astrophysics, Computer Science - Artificial Intelligence, Computer Science - Machine Learning}, month = jul, number = {4}, pages = {4990-5011}, primaryclass = {astro-ph.IM}, title = {{AstroCLIP: a cross-modal foundation model for galaxies}}, volume = {531}, year = {2024} }

- Detecting galaxy tidal features using self-supervised representation learning. Alice Desmons, Sarah Brough, and Francois Lanusse. Monthly Notices of the Royal Astronomical Society, Jul 2024

Low surface brightness substructures around galaxies, known as tidal features, are a valuable tool in the detection of past or ongoing galaxy mergers, and their properties can answer questions about the progenitor galaxies involved in the interactions. The assembly of current tidal feature samples is primarily achieved using visual classification, making it difficult to construct large samples and draw accurate and statistically robust conclusions about the galaxy evolution process. With upcoming large optical imaging surveys such as the Vera C. Rubin Observatory’s Legacy Survey of Space and Time, predicted to observe billions of galaxies, it is imperative that we refine our methods of detecting and classifying samples of merging galaxies. This paper presents promising results from a self-supervised machine learning model, trained on data from the Ultradeep layer of the Hyper Suprime-Cam Subaru Strategic Program optical imaging survey, designed to automate the detection of tidal features. We find that self-supervised models are capable of detecting tidal features, and that our model outperforms previous automated tidal feature detection methods, including a fully supervised model. An earlier method applied to real galaxy images achieved 76 per cent completeness for 22 per cent contamination, while our model achieves considerably higher (96 per cent) completeness for the same level of contamination. We emphasize a number of advantages of self-supervised models over fully supervised models including maintaining excellent performance when using only 50 labelled examples for training, and the ability to perform similarity searches using a single example of a galaxy with tidal features.

@article{2024MNRAS.531.4070D, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2024MNRAS.531.4070D}, archiveprefix = {arXiv}, author = {{Desmons}, Alice and {Brough}, Sarah and {Lanusse}, Francois}, doi = {10.1093/mnras/stae1402}, eprint = {2307.04967}, journal = {Monthly Notices of the Royal Astronomical Society}, keywords = {Astrophysics - Astrophysics of Galaxies, Astrophysics - Instrumentation and Methods for Astrophysics}, month = jul, number = {4}, pages = {4070-4084}, primaryclass = {astro-ph.GA}, title = {{Detecting galaxy tidal features using self-supervised representation learning}}, volume = {531}, year = {2024} }

- An Empirical Model For Intrinsic Alignments: Insights From Cosmological Simulations. Nicholas Van Alfen, Duncan Campbell, Jonathan Blazek, and 5 more authors. The Open Journal of Astrophysics, Jun 2024

We extend current models of the halo occupation distribution (HOD) to include a flexible, empirical framework for the forward modeling of the intrinsic alignment (IA) of galaxies. A primary goal of this work is to produce mock galaxy catalogs for the purpose of validating existing models and methods for the mitigation of IA in weak lensing measurements. This technique can also be used to produce new, simulation-based predictions for IA and galaxy clustering. Our model is probabilistically formulated, and rests upon the assumption that the orientations of galaxies exhibit a correlation with their host dark matter (sub)halo orientation or with their position within the halo. We examine the necessary components and phenomenology of such a model by considering the alignments between (sub)halos in a cosmological dark matter only simulation. We then validate this model for a realistic galaxy population in a set of simulations in the Illustris-TNG suite. We create an HOD mock with Illustris-like correlations using our method, constraining the associated IA model parameters, with the between our model’s correlations and those of Illustris matching as closely as 1.4 and 1.1 for orientation–position and orientation–orientation correlation functions, respectively. By modeling the misalignment between galaxies and their host halo, we show that the 3-dimensional two-point position and orientation correlation functions of simulated (sub)halos and galaxies can be accurately reproduced from quasi-linear scales down to . We also find evidence for environmental influence on IA within a halo. Our publicly-available software provides a key component enabling efficient determination of Bayesian posteriors on IA model parameters using observational measurements of galaxy-orientation correlation functions in the highly nonlinear regime.

@article{2024OJAp....7E..45V, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2024OJAp....7E..45V}, archiveprefix = {arXiv}, author = {{Van Alfen}, Nicholas and {Campbell}, Duncan and {Blazek}, Jonathan and {Leonard}, C. Danielle and {Lanusse}, Francois and {Hearin}, Andrew and {Mandelbaum}, Rachel and {LSST Dark Energy Science Collaboration}}, doi = {10.33232/001c.118783}, eid = {45}, eprint = {2311.07374}, journal = {The Open Journal of Astrophysics}, keywords = {Astrophysics - Cosmology and Nongalactic Astrophysics}, month = jun, pages = {45}, primaryclass = {astro-ph.CO}, title = {{An Empirical Model For Intrinsic Alignments: Insights From Cosmological Simulations}}, volume = {7}, year = {2024} }

- Learning Diffusion Priors from Observations by Expectation Maximization. François Rozet, Gérôme Andry, François Lanusse, and 1 more author. arXiv e-prints, May 2024

Diffusion models recently proved to be remarkable priors for Bayesian inverse problems. However, training these models typically requires access to large amounts of clean data, which could prove difficult in some settings. In this work, we present a novel method based on the expectation-maximization algorithm for training diffusion models from incomplete and noisy observations only. Unlike previous works, our method leads to proper diffusion models, which is crucial for downstream tasks. As part of our method, we propose and motivate an improved posterior sampling scheme for unconditional diffusion models. We present empirical evidence supporting the effectiveness of our method.

@article{2024arXiv240513712R, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2024arXiv240513712R}, archiveprefix = {arXiv}, author = {{Rozet}, Fran{\c{c}}ois and {Andry}, G{\'e}r{\^o}me and {Lanusse}, Fran{\c{c}}ois and {Louppe}, Gilles}, doi = {10.48550/arXiv.2405.13712}, eid = {arXiv:2405.13712}, eprint = {2405.13712}, journal = {arXiv e-prints}, keywords = {Computer Science - Machine Learning, Statistics - Machine Learning}, month = may, pages = {arXiv:2405.13712}, primaryclass = {cs.LG}, title = {{Learning Diffusion Priors from Observations by Expectation Maximization}}, year = {2024} }

- Differentiable stochastic halo occupation distribution. Benjamin Horowitz, ChangHoon Hahn, Francois Lanusse, and 2 more authors. Monthly Notices of the Royal Astronomical Society, Apr 2024

In this work, we demonstrate how differentiable stochastic sampling techniques developed in the context of deep reinforcement learning can be used to perform efficient parameter inference over stochastic, simulation-based, forward models. As a particular example, we focus on the problem of estimating parameters of halo occupation distribution (HOD) models that are used to connect galaxies with their dark matter haloes. Using a combination of continuous relaxation and gradient re-parametrization techniques, we can obtain well-defined gradients with respect to HOD parameters through discrete galaxy catalogue realizations. Having access to these gradients allows us to leverage efficient sampling schemes, such as Hamiltonian Monte Carlo, and greatly speed up parameter inference. We demonstrate our technique on a mock galaxy catalogue generated from the Bolshoi simulation using a standard HOD model and find near-identical posteriors as standard Markov chain Monte Carlo techniques with an increase of ~8× in convergence efficiency. Our differentiable HOD model also has broad applications in full forward model approaches to cosmic structure and cosmological analysis.

@article{2024MNRAS.529.2473H, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2024MNRAS.529.2473H}, archiveprefix = {arXiv}, author = {{Horowitz}, Benjamin and {Hahn}, ChangHoon and {Lanusse}, Francois and {Modi}, Chirag and {Ferraro}, Simone}, doi = {10.1093/mnras/stae350}, eprint = {2211.03852}, journal = {Monthly Notices of the Royal Astronomical Society}, keywords = {methods: numerical, galaxies: fundamental parameters, galaxies: haloes, cosmology: theory, Astrophysics - Cosmology and Nongalactic Astrophysics, Astrophysics - Astrophysics of Galaxies}, month = apr, number = {3}, pages = {2473-2482}, primaryclass = {astro-ph.CO}, title = {{Differentiable stochastic halo occupation distribution}}, volume = {529}, year = {2024} }

- Differentiable Cosmological Simulation with the Adjoint Method. Yin Li, Chirag Modi, Drew Jamieson, and 5 more authors. Astrophysical Journal, Supplement, Feb 2024

Rapid advances in deep learning have brought not only a myriad of powerful neural networks, but also breakthroughs that benefit established scientific research. In particular, automatic differentiation (AD) tools and computational accelerators like GPUs have facilitated forward modeling of the Universe with differentiable simulations. Based on analytic or automatic backpropagation, current differentiable cosmological simulations are limited by memory, and thus are subject to a trade-off between time and space/mass resolution, usually sacrificing both. We present a new approach free of such constraints, using the adjoint method and reverse time integration. It enables larger and more accurate forward modeling at the field level, and will improve gradient-based optimization and inference. We implement it in an open-source particle-mesh (PM) N-body library pmwd (PM with derivatives). Based on the powerful AD system JAX, pmwd is fully differentiable, and is highly performant on GPUs.

@article{2024ApJS..270...36L, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2024ApJS..270...36L}, archiveprefix = {arXiv}, author = {{Li}, Yin and {Modi}, Chirag and {Jamieson}, Drew and {Zhang}, Yucheng and {Lu}, Libin and {Feng}, Yu and {Lanusse}, Fran{\c{c}}ois and {Greengard}, Leslie}, doi = {10.3847/1538-4365/ad0ce7}, eid = {36}, eprint = {2211.09815}, journal = {Astrophysical Journal, Supplement}, keywords = {Cosmology, Large-scale structure of the universe, N-body simulations, Astronomy software, Computational methods, Algorithms, 343, 902, 1083, 1855, 1965, 1883, Astrophysics - Instrumentation and Methods for Astrophysics, Astrophysics - Cosmology and Nongalactic Astrophysics}, month = feb, number = {2}, pages = {36}, primaryclass = {astro-ph.IM}, title = {{Differentiable Cosmological Simulation with the Adjoint Method}}, volume = {270}, year = {2024} }

2023

- Unified framework for diffusion generative models in SO(3): applications in computer vision and astrophysicsYesukhei Jagvaral, Francois Lanusse, and Rachel MandelbaumarXiv e-prints Dec 2023

Diffusion-based generative models represent the current state-of-the-art for image generation. However, standard diffusion models are based on Euclidean geometry and do not translate directly to manifold-valued data. In this work, we develop extensions of both score-based generative models (SGMs) and Denoising Diffusion Probabilistic Models (DDPMs) to the Lie group of 3D rotations, SO(3). SO(3) is of particular interest in many disciplines such as robotics, biochemistry and astronomy/cosmology science. Contrary to more general Riemannian manifolds, SO(3) admits a tractable solution to heat diffusion, and allows us to implement efficient training of diffusion models. We apply both SO(3) DDPMs and SGMs to synthetic densities on SO(3) and demonstrate state-of-the-art results. Additionally, we demonstrate the practicality of our model on pose estimation tasks and in predicting correlated galaxy orientations for astrophysics/cosmology.

@article{2023arXiv231211707J, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2023arXiv231211707J}, archiveprefix = {arXiv}, author = {{Jagvaral}, Yesukhei and {Lanusse}, Francois and {Mandelbaum}, Rachel}, doi = {10.48550/arXiv.2312.11707}, eid = {arXiv:2312.11707}, eprint = {2312.11707}, journal = {arXiv e-prints}, keywords = {Computer Science - Machine Learning, Computer Science - Computer Vision and Pattern Recognition}, month = dec, pages = {arXiv:2312.11707}, primaryclass = {cs.LG}, title = {{Unified framework for diffusion generative models in SO(3): applications in computer vision and astrophysics}}, year = {2023} } - Forecasting the power of higher order weak-lensing statistics with automatically differentiable simulationsDenise Lanzieri, François Lanusse, Chirag Modi, and 4 more authorsAstronomy and Astrophysics Nov 2023

Aims. We present the fully differentiable physical Differentiable Lensing Lightcone (DLL) model, designed for use as a forward model in Bayesian inference algorithms that require access to derivatives of lensing observables with respect to cosmological parameters. Methods. We extended the public FlowPM N-body code, a particle-mesh N-body solver, while simulating the lensing lightcones and implementing the Born approximation in the TensorFlow framework. Furthermore, DLL is aimed at achieving high accuracy with low computational costs. As such, it integrates a novel hybrid physical-neural (HPN) parameterization that is able to compensate for the small-scale approximations resulting from particle-mesh schemes for cosmological N-body simulations. We validated our simulations in the context of the Vera C. Rubin Observatory’s Legacy Survey of Space and Time (LSST) against high-resolution $\kappa$TNG-Dark simulations by comparing both the lensing angular power spectrum and multiscale peak counts. We demonstrated its ability to recover lensing $C_\ell$ up to a 10% accuracy at $\ell = 1000$ for sources at a redshift of 1, with as few as $\sim 0.6$ particles per Mpc $h^{-1}$. As a first-use case, we applied this tool to an investigation of the relative constraining power of the angular power spectrum and peak counts statistic in an LSST setting. Such comparisons are typically very costly as they require a large number of simulations and do not scale appropriately with an increasing number of cosmological parameters. As opposed to forecasts based on finite differences, these statistics can be analytically differentiated with respect to cosmology or any systematics included in the simulations at the same computational cost of the forward simulation. Results. We find that the peak counts outperform the power spectrum in terms of the cold dark matter parameter, $\Omega_c$, as well as on the amplitude of density fluctuations, $\sigma_8$, and the amplitude of the intrinsic alignment signal, $A_{\rm IA}$.

@article{2023A&A...679A..61L, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2023A&A...679A..61L}, archiveprefix = {arXiv}, author = {{Lanzieri}, Denise and {Lanusse}, Fran{\c{c}}ois and {Modi}, Chirag and {Horowitz}, Benjamin and {Harnois-D{\'e}raps}, Joachim and {Starck}, Jean-Luc and {LSST Dark Energy Science Collaboration (LSST DESC)}}, doi = {10.1051/0004-6361/202346888}, eid = {A61}, eprint = {2305.07531}, journal = {Astronomy and Astrophysics}, keywords = {methods: statistical, cosmology: large-scale structure of Universe, gravitational lensing: weak, Astrophysics - Instrumentation and Methods for Astrophysics, Astrophysics - Cosmology and Nongalactic Astrophysics}, month = nov, pages = {A61}, primaryclass = {astro-ph.IM}, title = {{Forecasting the power of higher order weak-lensing statistics with automatically differentiable simulations}}, volume = {679}, year = {2023} } - Multiple Physics Pretraining for Physical Surrogate ModelsMichael McCabe, Bruno Régaldo-Saint Blancard, Liam Holden Parker, and 11 more authorsarXiv e-prints Oct 2023

We introduce multiple physics pretraining (MPP), an autoregressive task-agnostic pretraining approach for physical surrogate modeling of spatiotemporal systems with transformers. In MPP, rather than training one model on a specific physical system, we train a backbone model to predict the dynamics of multiple heterogeneous physical systems simultaneously in order to learn features that are broadly useful across systems and facilitate transfer. In order to learn effectively in this setting, we introduce a shared embedding and normalization strategy that projects the fields of multiple systems into a shared embedding space. We validate the efficacy of our approach on both pretraining and downstream tasks over a broad fluid mechanics-oriented benchmark. We show that a single MPP-pretrained transformer is able to match or outperform task-specific baselines on all pretraining sub-tasks without the need for finetuning. For downstream tasks, we demonstrate that finetuning MPP-trained models results in more accurate predictions across multiple time-steps on systems with previously unseen physical components or higher dimensional systems compared to training from scratch or finetuning pretrained video foundation models. We open-source our code and model weights trained at multiple scales for reproducibility.

@article{2023arXiv231002994M, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2023arXiv231002994M}, archiveprefix = {arXiv}, author = {{McCabe}, Michael and {R{\'e}galdo-Saint Blancard}, Bruno and {Holden Parker}, Liam and {Ohana}, Ruben and {Cranmer}, Miles and {Bietti}, Alberto and {Eickenberg}, Michael and {Golkar}, Siavash and {Krawezik}, Geraud and {Lanusse}, Francois and {Pettee}, Mariel and {Tesileanu}, Tiberiu and {Cho}, Kyunghyun and {Ho}, Shirley}, doi = {10.48550/arXiv.2310.02994}, eid = {arXiv:2310.02994}, eprint = {2310.02994}, journal = {arXiv e-prints}, keywords = {Computer Science - Machine Learning, Computer Science - Artificial Intelligence, Statistics - Machine Learning}, month = oct, pages = {arXiv:2310.02994}, primaryclass = {cs.LG}, title = {{Multiple Physics Pretraining for Physical Surrogate Models}}, year = {2023} } - xVal: A Continuous Numerical Tokenization for Scientific Language ModelsSiavash Golkar, Mariel Pettee, Michael Eickenberg, and 11 more authorsarXiv e-prints Oct 2023

Due in part to their discontinuous and discrete default encodings for numbers, Large Language Models (LLMs) have not yet been commonly used to process numerically-dense scientific datasets. Rendering datasets as text, however, could help aggregate diverse and multi-modal scientific data into a single training corpus, thereby potentially facilitating the development of foundation models for science. In this work, we introduce xVal, a strategy for continuously tokenizing numbers within language models that results in a more appropriate inductive bias for scientific applications. By training specially-modified language models from scratch on a variety of scientific datasets formatted as text, we find that xVal generally outperforms other common numerical tokenization strategies on metrics including out-of-distribution generalization and computational efficiency.

@article{2023arXiv231002989G, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2023arXiv231002989G}, archiveprefix = {arXiv}, author = {{Golkar}, Siavash and {Pettee}, Mariel and {Eickenberg}, Michael and {Bietti}, Alberto and {Cranmer}, Miles and {Krawezik}, Geraud and {Lanusse}, Francois and {McCabe}, Michael and {Ohana}, Ruben and {Parker}, Liam and {R{\'e}galdo-Saint Blancard}, Bruno and {Tesileanu}, Tiberiu and {Cho}, Kyunghyun and {Ho}, Shirley}, doi = {10.48550/arXiv.2310.02989}, eid = {arXiv:2310.02989}, eprint = {2310.02989}, journal = {arXiv e-prints}, keywords = {Statistics - Machine Learning, Computer Science - Artificial Intelligence, Computer Science - Computation and Language, Computer Science - Machine Learning}, month = oct, pages = {arXiv:2310.02989}, primaryclass = {stat.ML}, title = {{xVal: A Continuous Numerical Tokenization for Scientific Language Models}}, year = {2023} } - Detecting Tidal Features using Self-Supervised LearningAlice Desmons, Sarah Brough, and Francois LanusseIn Machine Learning for Astrophysics Jul 2023

Low surface brightness substructures around galaxies, known as tidal features, are a valuable tool in the detection of past or ongoing galaxy mergers, and their properties can answer questions about the progenitor galaxies involved in the interactions. The assembly of current tidal feature samples is primarily achieved using visual classification, making it difficult to construct large samples and draw accurate and statistically robust conclusions about the galaxy evolution process. With upcoming large optical imaging surveys such as the Vera C. Rubin Observatory Legacy Survey of Space and Time (LSST), predicted to observe billions of galaxies, it is imperative that we refine our methods of detecting and classifying samples of merging galaxies. This paper presents promising results from a self-supervised machine learning model, trained on data from the Ultradeep layer of the Hyper Suprime-Cam Subaru Strategic Program optical imaging survey, designed to automate the detection of tidal features. We find that self-supervised models are capable of detecting tidal features, and that our model outperforms previous automated tidal feature detection methods, including a fully supervised model. An earlier method applied to real galaxy images achieved 76% completeness for 22% contamination, while our model achieves considerably higher (96%) completeness for the same level of contamination. We emphasise a number of advantages of self-supervised models over fully supervised models including maintaining excellent performance when using only 50 labelled examples for training, and the ability to perform similarity searches using a single example of a galaxy with tidal features.

@inproceedings{2023mla..confE..11D, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2023mla..confE..11D}, archiveprefix = {arXiv}, author = {{Desmons}, Alice and {Brough}, Sarah and {Lanusse}, Francois}, booktitle = {Machine Learning for Astrophysics}, doi = {10.48550/arXiv.2308.07962}, eid = {11}, eprint = {2308.07962}, keywords = {Astrophysics - Astrophysics of Galaxies, Astrophysics - Instrumentation and Methods for Astrophysics}, month = jul, pages = {11}, primaryclass = {astro-ph.GA}, title = {{Detecting Tidal Features using Self-Supervised Learning}}, year = {2023} } - JAX-COSMO: An End-to-End Differentiable and GPU Accelerated Cosmology LibraryJean-Eric Campagne, François Lanusse, Joe Zuntz, and 7 more authorsThe Open Journal of Astrophysics Apr 2023

We present jax-cosmo, a library for automatically differentiable cosmological theory calculations. It uses the JAX library, which has created a new coding ecosystem, especially in probabilistic programming. As well as batch acceleration, just-in-time compilation, and automatic optimization of code for different hardware modalities (CPU, GPU, TPU), JAX exposes an automatic differentiation (autodiff) mechanism. Thanks to autodiff, jax-cosmo gives access to the derivatives of cosmological likelihoods with respect to any of their parameters, and thus enables a range of powerful Bayesian inference algorithms, otherwise impractical in cosmology, such as Hamiltonian Monte Carlo and Variational Inference. In its initial release, jax-cosmo implements background evolution, linear and non-linear power spectra (using halofit or the Eisenstein and Hu transfer function), as well as angular power spectra with the Limber approximation for galaxy and weak lensing probes, all differentiable with respect to the cosmological parameters and their other inputs. We illustrate how autodiff can be a game-changer for common tasks involving Fisher matrix computations, or full posterior inference with gradient-based techniques. In particular, we show how Fisher matrices are now fast, exact, no longer require any fine tuning, and are themselves differentiable. Finally, using a Dark Energy Survey Year 1 3x2pt analysis as a benchmark, we demonstrate how jax-cosmo can be combined with Probabilistic Programming Languages to perform posterior inference with state-of-the-art algorithms including a No U-Turn Sampler, Automatic Differentiation Variational Inference, and Neural Transport HMC. We further demonstrate that Normalizing Flows using Neural Transport are a promising methodology for model validation in the early stages of analysis.

@article{2023OJAp....6E..15C, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2023OJAp....6E..15C}, archiveprefix = {arXiv}, author = {{Campagne}, Jean-Eric and {Lanusse}, Fran{\c{c}}ois and {Zuntz}, Joe and {Boucaud}, Alexandre and {Casas}, Santiago and {Karamanis}, Minas and {Kirkby}, David and {Lanzieri}, Denise and {Peel}, Austin and {Li}, Yin}, doi = {10.21105/astro.2302.05163}, eid = {15}, eprint = {2302.05163}, journal = {The Open Journal of Astrophysics}, keywords = {Astrophysics - Cosmology and Nongalactic Astrophysics, Astrophysics - Instrumentation and Methods for Astrophysics}, month = apr, pages = {15}, primaryclass = {astro-ph.CO}, title = {{JAX-COSMO: An End-to-End Differentiable and GPU Accelerated Cosmology Library}}, volume = {6}, year = {2023} } -

Probabilistic mass-mapping with neural score estimationB. Remy, F. Lanusse, N. Jeffrey, and 4 more authorsAstronomy and Astrophysics Apr 2023

Context. Weak lensing mass-mapping is a useful tool for accessing the full distribution of dark matter on the sky, but because of intrinsic galaxy ellipticities, finite fields, and missing data, the recovery of dark matter maps constitutes a challenging, ill-posed inverse problem. Aims. We introduce a novel methodology that enables the efficient sampling of the high-dimensional Bayesian posterior of the weak lensing mass-mapping problem, relying on simulations to define a fully non-Gaussian prior. We aim to demonstrate the accuracy of the method to simulated fields, and then proceed to apply it to the mass reconstruction of the HST/ACS COSMOS field. Methods. The proposed methodology combines elements of Bayesian statistics, analytic theory, and a recent class of deep generative models based on neural score matching. This approach allows us to make full use of analytic cosmological theory to constrain the 2pt statistics of the solution, to understand any differences between this analytic prior and full simulations from cosmological simulations, and to obtain samples from the full Bayesian posterior of the problem for robust uncertainty quantification. Results. We demonstrate the method in the $\kappa$TNG simulations and find that the posterior mean significantly outperforms previous methods (Kaiser-Squires, Wiener filter, Sparsity priors) both for the root-mean-square error and in terms of the Pearson correlation. We further illustrate the interpretability of the recovered posterior by establishing a close correlation between posterior convergence values and the S/N of the clusters artificially introduced into a field. Finally, we apply the method to the reconstruction of the HST/ACS COSMOS field, which yields the highest-quality convergence map of this field to date. Conclusions. We find the proposed approach to be superior to previous algorithms, scalable, providing uncertainties, and using a fully non-Gaussian prior. All codes and data products associated with this paper are available at https://github.com/CosmoStat/jax-lensing.

@article{2023A&A...672A..51R, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2023A&A...672A..51R}, archiveprefix = {arXiv}, author = {{Remy}, B. and {Lanusse}, F. and {Jeffrey}, N. and {Liu}, J. and {Starck}, J. -L. and {Osato}, K. and {Schrabback}, T.}, doi = {10.1051/0004-6361/202243054}, eid = {A51}, eprint = {2201.05561}, journal = {Astronomy and Astrophysics}, keywords = {cosmology: observations, methods: statistical, gravitational lensing: weak, Astrophysics - Cosmology and Nongalactic Astrophysics, Astrophysics - Instrumentation and Methods for Astrophysics, Computer Science - Machine Learning}, month = apr, pages = {A51}, primaryclass = {astro-ph.CO}, title = {{Probabilistic mass-mapping with neural score estimation}}, volume = {672}, year = {2023} } - The N5K Challenge: Non-Limber Integration for LSST CosmologyC. Danielle Leonard, Tassia Ferreira, Xiao Fang, and 8 more authorsThe Open Journal of Astrophysics Feb 2023

The rapidly increasing statistical power of cosmological imaging surveys requires us to reassess the regime of validity for various approximations that accelerate the calculation of relevant theoretical predictions. In this paper, we present the results of the ‘N5K non-Limber integration challenge’, the goal of which was to quantify the performance of different approaches to calculating the angular power spectrum of galaxy number counts and cosmic shear data without invoking the so-called ‘Limber approximation’, in the context of the Rubin Observatory Legacy Survey of Space and Time (LSST). We quantify the performance, in terms of accuracy and speed, of three non-Limber implementations: `FKEM (CosmoLike)`, `Levin`, and `matter`, themselves based on different integration schemes and approximations. We find that in the challenge’s fiducial 3x2pt LSST Year 10 scenario, `FKEM (CosmoLike)` produces the fastest run time within the required accuracy by a considerable margin, positioning it favourably for use in Bayesian parameter inference. This method, however, requires further development and testing to extend its use to certain analysis scenarios, particularly those involving a scale-dependent growth rate. For this and other reasons discussed herein, alternative approaches such as `matter` and `Levin` may be necessary for a full exploration of parameter space. We also find that the usual first-order Limber approximation is insufficiently accurate for LSST Year 10 3x2pt analysis on $\ell = 200$-$1000$, whereas invoking the second-order Limber approximation on these scales (with a full non-Limber method at smaller $\ell$) does suffice.

@article{2023OJAp....6E...8L, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2023OJAp....6E...8L}, archiveprefix = {arXiv}, author = {{Leonard}, C. Danielle and {Ferreira}, Tassia and {Fang}, Xiao and {Reischke}, Robert and {Schoeneberg}, Nils and {Tr{\"o}ster}, Tilman and {Alonso}, David and {Campagne}, Jean-Eric and {Lanusse}, Fran{\c{c}}ois and {Slosar}, An{\v{z}}e and {Ishak}, Mustapha}, doi = {10.21105/astro.2212.04291}, eid = {8}, eprint = {2212.04291}, journal = {The Open Journal of Astrophysics}, keywords = {Astrophysics - Cosmology and Nongalactic Astrophysics}, month = feb, pages = {8}, primaryclass = {astro-ph.CO}, title = {{The N5K Challenge: Non-Limber Integration for LSST Cosmology}}, volume = {6}, year = {2023} } -

The Dawes Review 10: The impact of deep learning for the analysis of galaxy surveysM. Huertas-Company, and F. LanussePublications of the Astron. Soc. of Australia Jan 2023

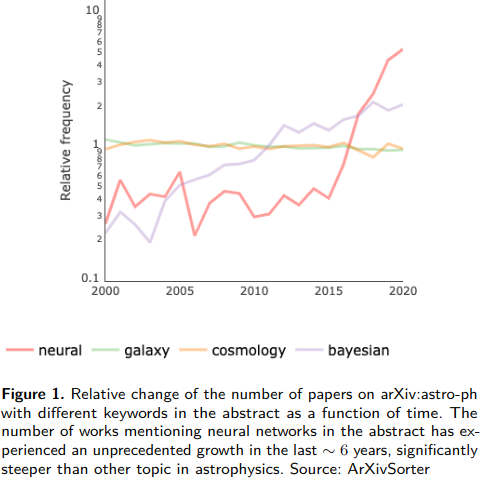

The amount and complexity of data delivered by modern galaxy surveys has been steadily increasing over the past years. New facilities will soon provide imaging and spectra of hundreds of millions of galaxies. Extracting coherent scientific information from these large and multi-modal data sets remains an open issue for the community and data-driven approaches such as deep learning have rapidly emerged as a potentially powerful solution to some long lasting challenges. This enthusiasm is reflected in an unprecedented exponential growth of publications using neural networks, which have gone from a handful of works in 2015 to an average of one paper per week in 2021 in the area of galaxy surveys. Half a decade after the first published work in astronomy mentioning deep learning, and shortly before new big data sets such as Euclid and LSST start becoming available, we believe it is timely to review what has been the real impact of this new technology in the field and its potential to solve key challenges raised by the size and complexity of the new datasets. The purpose of this review is thus two-fold. We first aim at summarising, in a common document, the main applications of deep learning for galaxy surveys that have emerged so far. We then extract the major achievements and lessons learned and highlight key open questions and limitations, which in our opinion, will require particular attention in the coming years. Overall, state-of-the-art deep learning methods are rapidly adopted by the astronomical community, reflecting a democratisation of these methods. This review shows that the majority of works using deep learning up to date are oriented to computer vision tasks (e.g. classification, segmentation). This is also the domain of application where deep learning has brought the most important breakthroughs so far. However, we also report that the applications are becoming more diverse and deep learning is used for estimating galaxy properties, identifying outliers or constraining the cosmological model. Most of these works remain at the exploratory level though which could partially explain the limited impact in terms of citations. Some common challenges will most likely need to be addressed before moving to the next phase of massive deployment of deep learning in the processing of future surveys; for example, uncertainty quantification, interpretability, data labelling and domain shift issues from training with simulations, which constitutes a common practice in astronomy.

@article{2023PASA...40....1H, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2023PASA...40....1H}, archiveprefix = {arXiv}, author = {{Huertas-Company}, M. and {Lanusse}, F.}, doi = {10.1017/pasa.2022.55}, eid = {e001}, eprint = {2210.01813}, journal = {Publications of the Astron. Soc. of Australia}, keywords = {methods: data analysis, cosmology: observations, cosmology: theory, galaxies: evolution, galaxies: formation, Astrophysics - Instrumentation and Methods for Astrophysics, Astrophysics - Cosmology and Nongalactic Astrophysics, Astrophysics - Astrophysics of Galaxies}, month = jan, pages = {e001}, primaryclass = {astro-ph.IM}, title = {{The Dawes Review 10: The impact of deep learning for the analysis of galaxy surveys}}, volume = {40}, year = {2023} }

2022

- Modeling halo and central galaxy orientations on the SO(3) manifold with score-based generative modelsYesukhei Jagvaral, Rachel Mandelbaum, and Francois LanussearXiv e-prints Dec 2022

Upcoming cosmological weak lensing surveys are expected to constrain cosmological parameters with unprecedented precision. In preparation for these surveys, large simulations with realistic galaxy populations are required to test and validate analysis pipelines. However, these simulations are computationally very costly – and at the volumes and resolutions demanded by upcoming cosmological surveys, they are computationally infeasible. Here, we propose a Deep Generative Modeling approach to address the specific problem of emulating realistic 3D galaxy orientations in synthetic catalogs. For this purpose, we develop a novel Score-Based Diffusion Model specifically for the SO(3) manifold. The model accurately learns and reproduces correlated orientations of galaxies and dark matter halos that are statistically consistent with those of a reference high-resolution hydrodynamical simulation.

@article{2022arXiv221205592J, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2022arXiv221205592J}, archiveprefix = {arXiv}, author = {{Jagvaral}, Yesukhei and {Mandelbaum}, Rachel and {Lanusse}, Francois}, doi = {10.48550/arXiv.2212.05592}, eid = {arXiv:2212.05592}, eprint = {2212.05592}, journal = {arXiv e-prints}, keywords = {Astrophysics - Astrophysics of Galaxies, Astrophysics - Cosmology and Nongalactic Astrophysics}, month = dec, pages = {arXiv:2212.05592}, primaryclass = {astro-ph.GA}, title = {{Modeling halo and central galaxy orientations on the SO(3) manifold with score-based generative models}}, year = {2022} } - pmwd: A Differentiable Cosmological Particle-Mesh N-body LibraryYin Li, Libin Lu, Chirag Modi, and 7 more authorsarXiv e-prints Nov 2022

The formation of the large-scale structure, the evolution and distribution of galaxies, quasars, and dark matter on cosmological scales, requires numerical simulations. Differentiable simulations provide gradients of the cosmological parameters, that can accelerate the extraction of physical information from statistical analyses of observational data. The deep learning revolution has brought not only myriad powerful neural networks, but also breakthroughs including automatic differentiation (AD) tools and computational accelerators like GPUs, facilitating forward modeling of the Universe with differentiable simulations. Because AD needs to save the whole forward evolution history to backpropagate gradients, current differentiable cosmological simulations are limited by memory. Using the adjoint method, with reverse time integration to reconstruct the evolution history, we develop a differentiable cosmological particle-mesh (PM) simulation library pmwd (particle-mesh with derivatives) with a low memory cost. Based on the powerful AD library JAX, pmwd is fully differentiable, and is highly performant on GPUs.

@article{2022arXiv221109958L, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2022arXiv221109958L}, archiveprefix = {arXiv}, author = {{Li}, Yin and {Lu}, Libin and {Modi}, Chirag and {Jamieson}, Drew and {Zhang}, Yucheng and {Feng}, Yu and {Zhou}, Wenda and {Pok Kwan}, Ngai and {Lanusse}, Fran{\c{c}}ois and {Greengard}, Leslie}, doi = {10.48550/arXiv.2211.09958}, eid = {arXiv:2211.09958}, eprint = {2211.09958}, journal = {arXiv e-prints}, keywords = {Astrophysics - Instrumentation and Methods for Astrophysics, Astrophysics - Cosmology and Nongalactic Astrophysics}, month = nov, pages = {arXiv:2211.09958}, primaryclass = {astro-ph.IM}, title = {{pmwd: A Differentiable Cosmological Particle-Mesh $N$-body Library}}, year = {2022} } - Towards solving model bias in cosmic shear forward modelingBenjamin Remy, Francois Lanusse, and Jean-Luc StarckarXiv e-prints Oct 2022

As the volume and quality of modern galaxy surveys increase, so does the difficulty of measuring the cosmological signal imprinted in galaxy shapes. Weak gravitational lensing sourced by the most massive structures in the Universe generates a slight shearing of galaxy morphologies called cosmic shear, a key probe for cosmological models. Modern techniques of shear estimation based on statistics of ellipticity measurements suffer from the fact that the ellipticity is not a well-defined quantity for arbitrary galaxy light profiles, biasing the shear estimation. We show that a hybrid physical and deep learning Hierarchical Bayesian Model, where a generative model captures the galaxy morphology, enables us to recover an unbiased estimate of the shear on realistic galaxies, thus solving the model bias.

@article{2022arXiv221016243R, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2022arXiv221016243R}, archiveprefix = {arXiv}, author = {{Remy}, Benjamin and {Lanusse}, Francois and {Starck}, Jean-Luc}, doi = {10.48550/arXiv.2210.16243}, eid = {arXiv:2210.16243}, eprint = {2210.16243}, journal = {arXiv e-prints}, keywords = {Astrophysics - Cosmology and Nongalactic Astrophysics, Astrophysics - Instrumentation and Methods for Astrophysics, Statistics - Machine Learning}, month = oct, pages = {arXiv:2210.16243}, primaryclass = {astro-ph.CO}, title = {{Towards solving model bias in cosmic shear forward modeling}}, year = {2022} } - Galaxies and haloes on graph neural networks: Deep generative modelling scalar and vector quantities for intrinsic alignmentYesukhei Jagvaral, François Lanusse, Sukhdeep Singh, and 3 more authorsMonthly Notices of the Royal Astronomical Society Oct 2022

In order to prepare for the upcoming wide-field cosmological surveys, large simulations of the Universe with realistic galaxy populations are required. In particular, the tendency of galaxies to naturally align towards overdensities, an effect called intrinsic alignments (IA), can be a major source of systematics in the weak lensing analysis. As the details of galaxy formation and evolution relevant to IA cannot be simulated in practice on such volumes, we propose as an alternative a Deep Generative Model. This model is trained on the IllustrisTNG-100 simulation and is capable of sampling the orientations of a population of galaxies so as to recover the correct alignments. In our approach, we model the cosmic web as a set of graphs, where the graphs are constructed for each halo, and galaxy orientations as a signal on those graphs. The generative model is implemented on a Generative Adversarial Network architecture and uses specifically designed Graph-Convolutional Networks sensitive to the relative 3D positions of the vertices. Given (sub)halo masses and tidal fields, the model is able to learn and predict scalar features such as galaxy and dark matter subhalo shapes; and more importantly, vector features such as the 3D orientation of the major axis of the ellipsoid and the complex 2D ellipticities. For correlations of 3D orientations the model is in good quantitative agreement with the measured values from the simulation, except for at very small and transition scales. For correlations of 2D ellipticities, the model is in good quantitative agreement with the measured values from the simulation on all scales. Additionally, the model is able to capture the dependence of IA on mass, morphological type, and central/satellite type.

@article{2022MNRAS.516.2406J, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2022MNRAS.516.2406J}, archiveprefix = {arXiv}, author = {{Jagvaral}, Yesukhei and {Lanusse}, Fran{\c{c}}ois and {Singh}, Sukhdeep and {Mandelbaum}, Rachel and {Ravanbakhsh}, Siamak and {Campbell}, Duncan}, doi = {10.1093/mnras/stac2083}, eprint = {2204.07077}, journal = {Monthly Notices of the Royal Astronomical Society}, keywords = {gravitational lensing: weak, methods: numerical, galaxies: statistics, galaxies: structure, cosmology: theory, Astrophysics - Astrophysics of Galaxies}, month = oct, number = {2}, pages = {2406-2419}, primaryclass = {astro-ph.GA}, title = {{Galaxies and haloes on graph neural networks: Deep generative modelling scalar and vector quantities for intrinsic alignment}}, volume = {516}, year = {2022} } - Bayesian uncertainty quantification for machine-learned models in physicsYarin Gal, Petros Koumoutsakos, Francois Lanusse, and 2 more authorsNature Reviews Physics Sep 2022

Being able to quantify uncertainty when comparing a theoretical or computational model to observations is critical to conducting a sound scientific investigation. With the rise of data-driven modelling, understanding various sources of uncertainty and developing methods to estimate them has gained renewed attention. Five researchers discuss uncertainty quantification in machine-learned models with an emphasis on issues relevant to physics problems.

@article{2022NatRP...4..573G, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2022NatRP...4..573G}, author = {{Gal}, Yarin and {Koumoutsakos}, Petros and {Lanusse}, Francois and {Louppe}, Gilles and {Papadimitriou}, Costas}, doi = {10.1038/s42254-022-00498-4}, journal = {Nature Reviews Physics}, month = sep, number = {9}, pages = {573-577}, title = {{Bayesian uncertainty quantification for machine-learned models in physics}}, volume = {4}, year = {2022} }

- From Data to Software to Science with the Rubin Observatory LSST. Katelyn Breivik, Andrew J. Connolly, K. E. Saavik Ford, and 97 more authors. arXiv e-prints, Aug 2022

The Vera C. Rubin Observatory Legacy Survey of Space and Time (LSST) dataset will dramatically alter our understanding of the Universe, from the origins of the Solar System to the nature of dark matter and dark energy. Much of this research will depend on the existence of robust, tested, and scalable algorithms, software, and services. Identifying and developing such tools ahead of time has the potential to significantly accelerate the delivery of early science from LSST. Developing these collaboratively, and making them broadly available, can enable more inclusive and equitable collaboration on LSST science. To facilitate such opportunities, a community workshop entitled “From Data to Software to Science with the Rubin Observatory LSST” was organized by the LSST Interdisciplinary Network for Collaboration and Computing (LINCC) and partners, and held at the Flatiron Institute in New York, March 28-30th 2022. The workshop included over 50 in-person attendees invited from over 300 applications. It identified seven key software areas of need: (i) scalable cross-matching and distributed joining of catalogs, (ii) robust photometric redshift determination, (iii) software for determination of selection functions, (iv) frameworks for scalable time-series analyses, (v) services for image access and reprocessing at scale, (vi) object image access (cutouts) and analysis at scale, and (vii) scalable job execution systems. This white paper summarizes the discussions of this workshop. It considers the motivating science use cases, identified cross-cutting algorithms, software, and services, their high-level technical specifications, and the principles of inclusive collaborations needed to develop them. We provide it as a useful roadmap of needs, as well as to spur action and collaboration between groups and individuals looking to develop reusable software for early LSST science.

@article{2022arXiv220802781B, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2022arXiv220802781B}, archiveprefix = {arXiv}, author = {{Breivik}, Katelyn and {Connolly}, Andrew J. and {Ford}, K.~E. Saavik and {Juri{\'c}}, Mario and {Mandelbaum}, Rachel and {Miller}, Adam A. and {Norman}, Dara and {Olsen}, Knut and {O'Mullane}, William and {Price-Whelan}, Adrian and {Sacco}, Timothy and {Sokoloski}, J.~L. and {Villar}, Ashley and {Acquaviva}, Viviana and {Ahumada}, Tomas and {AlSayyad}, Yusra and {Alves}, Catarina S. and {Andreoni}, Igor and {Anguita}, Timo and {Best}, Henry J. and {Bianco}, Federica B. and {Bonito}, Rosaria and {Bradshaw}, Andrew and {Burke}, Colin J. and {Rodrigues de Campos}, Andresa and {Cantiello}, Matteo and {Caplar}, Neven and {Chandler}, Colin Orion and {Chan}, James and {Nicolaci da Costa}, Luiz and {Danieli}, Shany and {Davenport}, James R.~A. and {Fabbian}, Giulio and {Fagin}, Joshua and {Gagliano}, Alexander and {Gall}, Christa and {Garavito Camargo}, Nicol{\'a}s and {Gawiser}, Eric and {Gezari}, Suvi and {Gomboc}, Andreja and {Gonzalez-Morales}, Alma X. and {Graham}, Matthew J. and {Gschwend}, Julia and {Guy}, Leanne P. and {Holman}, Matthew J. and {Hsieh}, Henry H. and {Hundertmark}, Markus and {Ili{\'c}}, Dragana and {Ishida}, Emille E.~O. and {Jurki{\'c}}, Tomislav and {Kannawadi}, Arun and {Kosakowski}, Alekzander and {Kova{\v{c}}evi{\'c}}, Andjelka B. and {Kubica}, Jeremy and {Lanusse}, Fran{\c{c}}ois and {Lazar}, Ilin and {Levine}, W. Garrett and {Li}, Xiaolong and {Lu}, Jing and {Luna}, Gerardo Juan Manuel and {Mahabal}, Ashish A. and {Malz}, Alex I. and {Mao}, Yao-Yuan and {Medan}, Ilija and {Moeyens}, Joachim and {Nikoli{\'c}}, Mladen and {Nikutta}, Robert and {O'Dowd}, Matt and {Olsen}, Charlotte and {Pearson}, Sarah and {Villicana Pedraza}, Ilhuiyolitzin and {Popinchalk}, Mark and {Popovi{\'c}}, Luka C. and {Pritchard}, Tyler A. and {Quint}, Bruno C. 
and {Radovi{\'c}}, Viktor and {Ragosta}, Fabio and {Riccio}, Gabriele and {Riley}, Alexander H. and {Ro{\.z}ek}, Agata and {S{\'a}nchez-S{\'a}ez}, Paula and {Sarro}, Luis M. and {Saunders}, Clare and {Savi{\'c}}, {\DJ}or{\dj}e V. and {Schmidt}, Samuel and {Scott}, Adam and {Shirley}, Raphael and {Smotherman}, Hayden R. and {Stetzler}, Steven and {Storey-Fisher}, Kate and {Street}, Rachel A. and {Trilling}, David E. and {Tsapras}, Yiannis and {Ustamujic}, Sabina and {van Velzen}, Sjoert and {V{\'a}zquez-Mata}, Jos{\'e} Antonio and {Venuti}, Laura and {Wyatt}, Samuel and {Yu}, Weixiang and {Zabludoff}, Ann}, doi = {10.48550/arXiv.2208.02781}, eid = {arXiv:2208.02781}, eprint = {2208.02781}, journal = {arXiv e-prints}, keywords = {Astrophysics - Instrumentation and Methods for Astrophysics}, month = aug, pages = {arXiv:2208.02781}, primaryclass = {astro-ph.IM}, title = {{From Data to Software to Science with the Rubin Observatory LSST}}, year = {2022} }

- Hybrid Physical-Neural ODEs for Fast N-body Simulations. Denise Lanzieri, Francois Lanusse, and Jean-Luc Starck. In Machine Learning for Astrophysics, Jul 2022

We present a new scheme to compensate for the small-scale approximations resulting from Particle-Mesh (PM) schemes for cosmological N-body simulations. Such simulations are fast, low-computational-cost realizations of the large-scale structure, but they lack resolution on small scales. To improve their accuracy, we introduce an additional effective force within the differential equations of the simulation, parameterized by a Fourier-space Neural Network acting on the PM-estimated gravitational potential. We compare the resulting matter power spectrum to the one obtained with the Potential Gradient Descent (PGD) scheme. We find a similar improvement in terms of the power spectrum, but our approach outperforms PGD for the cross-correlation coefficients and is more robust to changes in simulation settings (different resolutions, different cosmologies).

@inproceedings{2022mla..confE..60L, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2022mla..confE..60L}, archiveprefix = {arXiv}, author = {{Lanzieri}, Denise and {Lanusse}, Francois and {Starck}, Jean-Luc}, booktitle = {Machine Learning for Astrophysics}, doi = {10.48550/arXiv.2207.05509}, eid = {60}, eprint = {2207.05509}, keywords = {Astrophysics - Cosmology and Nongalactic Astrophysics, Computer Science - Machine Learning}, month = jul, pages = {60}, primaryclass = {astro-ph.CO}, title = {{Hybrid Physical-Neural ODEs for Fast N-body Simulations}}, year = {2022} }

- Neural Posterior Estimation with Differentiable Simulator. Justine Zeghal, Francois Lanusse, Alexandre Boucaud, and 2 more authors. In Machine Learning for Astrophysics, Jul 2022

Simulation-Based Inference (SBI) is a promising Bayesian inference framework that alleviates the need for analytic likelihoods to estimate posterior distributions. Recent advances using neural density estimators in SBI algorithms have demonstrated the ability to achieve high-fidelity posteriors, at the expense of a large number of simulations, which makes their application potentially very time-consuming when using complex physical simulations. In this work we focus on boosting the sample-efficiency of posterior density estimation using the gradients of the simulator. We present a new method to perform Neural Posterior Estimation (NPE) with a differentiable simulator. We demonstrate how gradient information helps constrain the shape of the posterior and improves sample-efficiency.

@inproceedings{2022mla..confE..52Z, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2022mla..confE..52Z}, archiveprefix = {arXiv}, author = {{Zeghal}, Justine and {Lanusse}, Francois and {Boucaud}, Alexandre and {Remy}, Benjamin and {Aubourg}, Eric}, booktitle = {Machine Learning for Astrophysics}, doi = {10.48550/arXiv.2207.05636}, eid = {52}, eprint = {2207.05636}, keywords = {Astrophysics - Instrumentation and Methods for Astrophysics, Statistics - Machine Learning}, month = jul, pages = {52}, primaryclass = {astro-ph.IM}, title = {{Neural Posterior Estimation with Differentiable Simulator}}, year = {2022} }

- Galaxies on graph neural networks: towards robust synthetic galaxy catalogs with deep generative models. Yesukhei Jagvaral, Rachel Mandelbaum, Francois Lanusse, and 3 more authors. In Machine Learning for Astrophysics, Jul 2022

Future astronomical imaging surveys are set to provide precise constraints on cosmological parameters, such as dark energy. However, production of synthetic data for these surveys, to test and validate analysis methods, suffers from a very high computational cost. In particular, generating mock galaxy catalogs at sufficiently large volume and high resolution will soon become computationally unreachable. In this paper, we address this problem with a Deep Generative Model to create robust mock galaxy catalogs that may be used to test and develop the analysis pipelines of future weak lensing surveys. We build our model on custom-built Graph Convolutional Networks, by placing each galaxy on a graph node and then connecting the graphs within each gravitationally bound system. We train our model on a cosmological simulation with realistic galaxy populations to capture the 2D and 3D orientations of galaxies. The samples from the model exhibit comparable statistical properties to those in the simulations. To the best of our knowledge, this is the first instance of a generative model on graphs in an astrophysical/cosmological context.

@inproceedings{2022mla..confE..19J, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2022mla..confE..19J}, archiveprefix = {arXiv}, author = {{Jagvaral}, Yesukhei and {Mandelbaum}, Rachel and {Lanusse}, Francois and {Ravanbakhsh}, Siamak and {Singh}, Sukhdeep and {Campbell}, Duncan}, booktitle = {Machine Learning for Astrophysics}, doi = {10.48550/arXiv.2212.05596}, eid = {19}, eprint = {2212.05596}, keywords = {Astrophysics - Astrophysics of Galaxies}, month = jul, pages = {19}, primaryclass = {astro-ph.GA}, title = {{Galaxies on graph neural networks: towards robust synthetic galaxy catalogs with deep generative models}}, year = {2022} }

- ShapeNet: Shape constraint for galaxy image deconvolution. F. Nammour, U. Akhaury, J. N. Girard, and 4 more authors. Astronomy and Astrophysics, Jul 2022

Deep learning (DL) has shown remarkable results in solving inverse problems in various domains. In particular, the Tikhonet approach is very powerful in deconvolving optical astronomical images. However, this approach only uses the ℓ₂ loss, which does not guarantee the preservation of physical information (e.g., flux and shape) of the object that is reconstructed in the image. A new loss function has been proposed in the framework of sparse deconvolution that better preserves the shape of galaxies and reduces the pixel error. In this paper, we extend the Tikhonet approach to take this shape constraint into account and apply our new DL method, called ShapeNet, to a simulated optical and radio-interferometry dataset. The originality of the paper relies on i) the shape constraint we use in the neural network framework, ii) the application of DL to radio-interferometry image deconvolution for the first time, and iii) the generation of a simulated radio dataset that we make available for the community. A range of examples illustrates the results.

@article{2022A&A...663A..69N, adsnote = {Provided by the SAO/NASA Astrophysics Data System}, adsurl = {https://ui.adsabs.harvard.edu/abs/2022A&A...663A..69N}, archiveprefix = {arXiv}, author = {{Nammour}, F. and {Akhaury}, U. and {Girard}, J.~N. and {Lanusse}, F. and {Sureau}, F. and {Ben Ali}, C. and {Starck}, J. -L.}, doi = {10.1051/0004-6361/202142626}, eid = {A69}, eprint = {2203.07412}, journal = {Astronomy and Astrophysics}, keywords = {miscellaneous, radio continuum: galaxies, techniques: image processing, methods: data analysis, methods: numerical, Astrophysics - Instrumentation and Methods for Astrophysics, Electrical Engineering and Systems Science - Image and Video Processing}, month = jul, pages = {A69}, primaryclass = {astro-ph.IM}, title = {{ShapeNet: Shape constraint for galaxy image deconvolution}}, volume = {663}, year = {2022} }

- Rubin-Euclid Derived Data Products: Initial Recommendations. Leanne P. Guy, Jean-Charles Cuillandre, Etienne Bachelet, and 118 more authors. In Zenodo id. 5836022, Jan 2022